Abstract

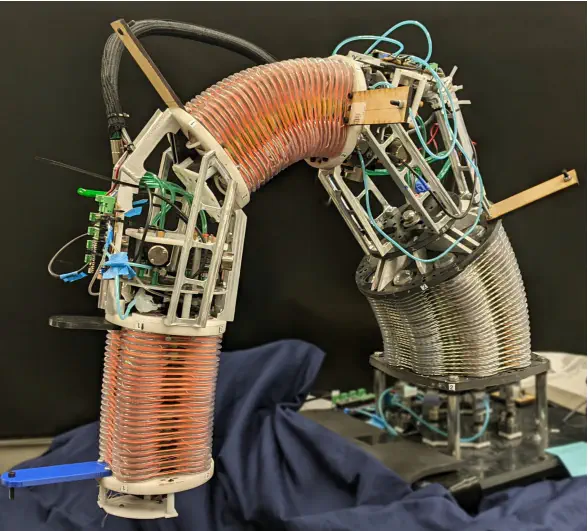

Unlike traditional rigid robots, soft robots offer greater flexibility, compliance, and adaptability. They are also typically cheaper to manufacture and lighter than their rigid counterparts. However, because they are difficult to model, soft robots still have limited real-world applications. This is especially true for tasks that require dynamic or fast motion. In addition, their operating principles and compliance make it difficult to integrate effective proprioceptive sensors. As a result, estimating the robot's state and predicting how that state evolves over time are challenging modeling tasks. Large-scale soft robots (approximately two meters in length), particularly fluid-driven ones, are even harder to model because of their increased inertia and the associated effects of gravity. Few approaches to soft robot control, whether learned or model-based, have enabled dynamic motions such as throwing or hammering, because most methods rely on restrictive assumptions about the kinematics, dynamics, or actuation models to make the control problem tractable or performant. To address this issue, we propose using Bayesian optimization to learn policies for dynamic tasks on a large-scale soft robot. This approach optimizes the task objective directly with respect to the commanded pressures, without requiring approximate kinematics or dynamics models as an intermediate step. We also present simulated and real-world experiments that demonstrate the efficacy of the proposed approach.
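To make "optimizing the task objective directly with respect to the commanded pressures" concrete, the following is a minimal sketch of generic Bayesian optimization: a Gaussian-process surrogate with a squared-exponential kernel and an expected-improvement acquisition maximized over random candidates. It is not the authors' implementation. The three-dimensional normalized pressure vector, the kernel length scale, the noise level, and `toy_objective` (a hypothetical stand-in for running one trial and scoring it) are all illustrative assumptions.

```python
import math
import random

# Hypothetical stand-in for one trial: in practice this would execute a
# commanded-pressure policy (normalized to [0, 1] per input) on the robot or in
# simulation and return a task cost, e.g. a throw's miss distance. Lower is better.
TARGET = [0.3, 0.7, 0.5]

def toy_objective(p):
    return sum((pi - ti) ** 2 for pi, ti in zip(p, TARGET))

def rbf(a, b, length_scale=0.2):
    """Squared-exponential (RBF) kernel; length_scale is an assumed value."""
    return math.exp(-0.5 * sum((x - y) ** 2 for x, y in zip(a, b)) / length_scale ** 2)

def cholesky(K):
    """Lower-triangular L with K = L L^T (clamping guards tiny negative pivots)."""
    n = len(K)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = K[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            L[i][j] = math.sqrt(max(s, 1e-12)) if i == j else s / L[j][j]
    return L

def forward(L, b):
    """Solve L x = b by forward substitution."""
    x = []
    for i in range(len(L)):
        x.append((b[i] - sum(L[i][k] * x[k] for k in range(i))) / L[i][i])
    return x

def backward(L, b):
    """Solve L^T x = b by back substitution."""
    n = len(L)
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (b[i] - sum(L[k][i] * x[k] for k in range(i + 1, n))) / L[i][i]
    return x

def fit_gp(X, y, noise=1e-4):
    """Fit a zero-mean GP to standardized targets; return a (mean, std) predictor."""
    mu = sum(y) / len(y)
    sd = math.sqrt(sum((v - mu) ** 2 for v in y) / len(y)) or 1.0
    ys = [(v - mu) / sd for v in y]
    K = [[rbf(a, b) + (noise if i == j else 0.0) for j, b in enumerate(X)]
         for i, a in enumerate(X)]
    L = cholesky(K)
    alpha = backward(L, forward(L, ys))  # alpha = K^{-1} ys

    def predict(x):
        k = [rbf(x, xi) for xi in X]
        mean = sum(ki * ai for ki, ai in zip(k, alpha))
        v = forward(L, k)
        var = max(1.0 - sum(vi * vi for vi in v), 1e-12)
        return mean * sd + mu, math.sqrt(var) * sd

    return predict

def expected_improvement(mean, std, best):
    """Expected improvement below the current best cost (minimization)."""
    z = (best - mean) / std
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)
    return (best - mean) * cdf + std * pdf

def bayes_opt(objective, dim, n_init=5, n_iter=15, n_candidates=500, seed=0):
    rng = random.Random(seed)

    def sample():
        return [rng.random() for _ in range(dim)]

    X = [sample() for _ in range(n_init)]  # initial random pressure commands
    y = [objective(x) for x in X]
    for _ in range(n_iter):
        predict = fit_gp(X, y)
        best = min(y)
        # Cheap acquisition optimizer: pick the random candidate with highest EI.
        cands = [sample() for _ in range(n_candidates)]
        x_next = max(cands, key=lambda c: expected_improvement(*predict(c), best))
        X.append(x_next)
        y.append(objective(x_next))  # one trial per iteration
    i = min(range(len(y)), key=y.__getitem__)
    return X[i], y[i], X, y
```

For example, `bayes_opt(toy_objective, dim=3, n_iter=10)` returns the lowest-cost pressure vector found after 15 trials. Each iteration spends exactly one trial, and the surrogate decides where to spend it, which is the property that makes this family of methods attractive when every evaluation is a physical run.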