Research Blog

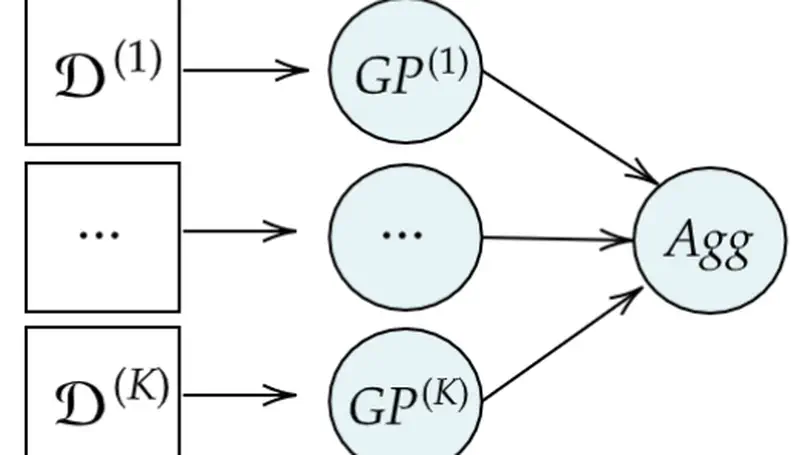

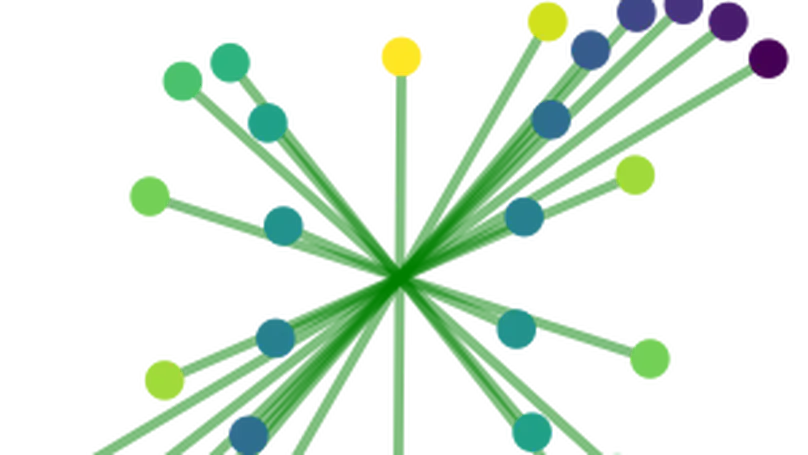

Meta-learning can make machine learning algorithms more data-efficient by using experience from prior tasks to learn related tasks more quickly. Since some tasks are more informative than others about performance on a given task in a domain, a natural question is: how might a meta-learning algorithm automatically choose an informative task to learn next? Here we summarise probabilistic active meta-learning (PAML), a meta-learning algorithm that uses latent task representations to rank and select informative tasks to learn next.
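To make "ranking tasks by their latent representations" concrete, here is a minimal sketch. It does not reproduce PAML's actual utility. Instead it assumes a simpler novelty score: each learned task has a diagonal-Gaussian latent posterior, and a candidate task scores higher the less probable its latent embedding is under the equally weighted mixture of those posteriors. The names `mixture_log_density` and `select_next_task` are hypothetical.

```python
import numpy as np

def mixture_log_density(z, means, stds):
    """Log-density of z under an equally weighted mixture of diagonal
    Gaussians N(means[i], diag(stds[i]**2)), one per learned task."""
    z = np.asarray(z, dtype=float)
    var = stds ** 2
    # Per-component log-densities, shape (n_tasks,)
    log_comp = -0.5 * np.sum((z - means) ** 2 / var + np.log(2 * np.pi * var), axis=1)
    # Log of the mixture average, computed with log-sum-exp for stability
    m = log_comp.max()
    return m + np.log(np.mean(np.exp(log_comp - m)))

def select_next_task(candidate_latents, seen_means, seen_stds):
    """Hypothetical novelty utility: pick the candidate whose latent is
    least explained by the latents of tasks already learned."""
    scores = np.array([-mixture_log_density(z, seen_means, seen_stds)
                       for z in candidate_latents])
    return int(np.argmax(scores)), scores

# Two learned tasks near the origin, two candidate tasks
seen_means = np.array([[0.0, 0.0], [0.5, 0.0]])
seen_stds = np.full_like(seen_means, 0.3)
candidates = np.array([[0.2, 0.1], [3.0, -2.0]])
best, scores = select_next_task(candidates, seen_means, seen_stds)
print(best)  # 1: the candidate far from every learned task
```

Scoring in latent space means candidate tasks can be ranked before any of their data are used for training, which is the property the summary highlights.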

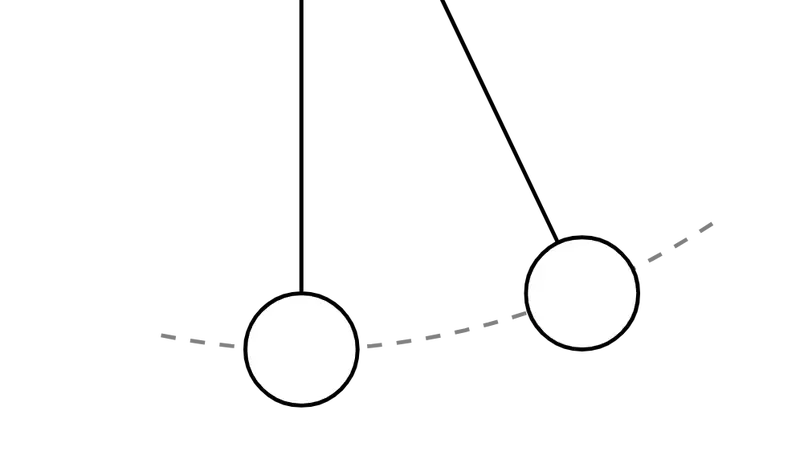

Learning models of physical systems can be difficult. Vanilla neural networks, such as residual networks, particularly struggle to learn invariant properties such as conservation of energy, which is fundamental to physical systems.
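A residual update `x_{t+1} = x_t + h * f(x_t)` has the same form as an explicit Euler step. The sketch below isolates that integration scheme by using the true vector field of a harmonic oscillator, H = (q² + p²)/2, instead of a learned network. Every step multiplies the energy by exactly (1 + h²), so the energy drifts upward rather than being conserved.

```python
def euler_step(q, p, h):
    """One explicit Euler (residual-style) step for H = (q^2 + p^2) / 2."""
    return q + h * p, p - h * q

def energy(q, p):
    return 0.5 * (q * q + p * p)

q, p, h = 1.0, 0.0, 0.1
e0 = energy(q, p)
for _ in range(100):
    q, p = euler_step(q, p, h)
print(energy(q, p) / e0)  # (1 + h^2)^100, about 2.70
```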

Learning models of physical systems can be tricky, but exploiting inductive biases about the nature of the system can speed up learning significantly. Here, we give a brief overview of variational integrator networks and the key insights behind them.
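Variational integrators are derived by discretising a Lagrangian rather than the equations of motion, and Störmer-Verlet (leapfrog) is a classic example for separable systems with potential V(q). In this sketch the potential is known (a harmonic oscillator), whereas a variational integrator network would learn it. On the same system where explicit Euler drifts, the energy error stays bounded over a long horizon.

```python
def verlet_step(q, p, h, grad_V):
    """One Störmer-Verlet step for H(q, p) = p^2 / 2 + V(q)."""
    p_half = p - 0.5 * h * grad_V(q)         # half kick
    q_next = q + h * p_half                  # drift
    p_next = p_half - 0.5 * h * grad_V(q_next)  # half kick
    return q_next, p_next

grad_V = lambda q: q  # harmonic oscillator, V(q) = q^2 / 2
q, p, h = 1.0, 0.0, 0.1
e0 = 0.5 * (q * q + p * p)
max_drift = 0.0
for _ in range(10_000):
    q, p = verlet_step(q, p, h, grad_V)
    max_drift = max(max_drift, abs(0.5 * (q * q + p * p) - e0))
print(max_drift)  # stays small and bounded, no systematic growth
```

Swapping `grad_V` for the gradient of a neural network potential gives the general shape of the idea, though the networks in the post may differ in detail.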

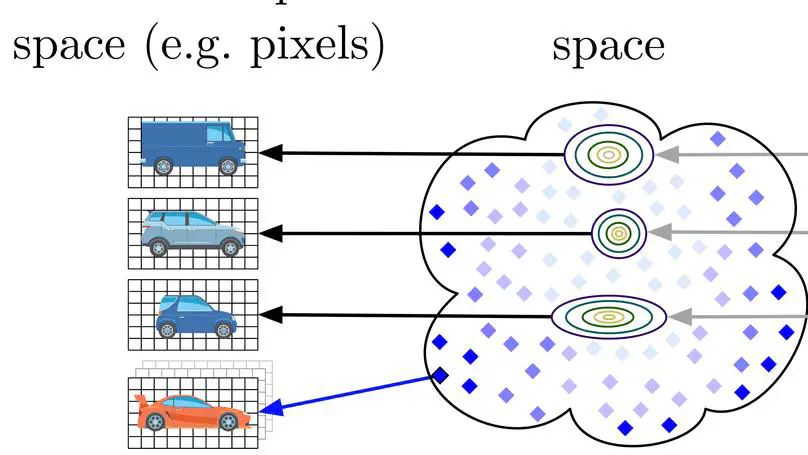

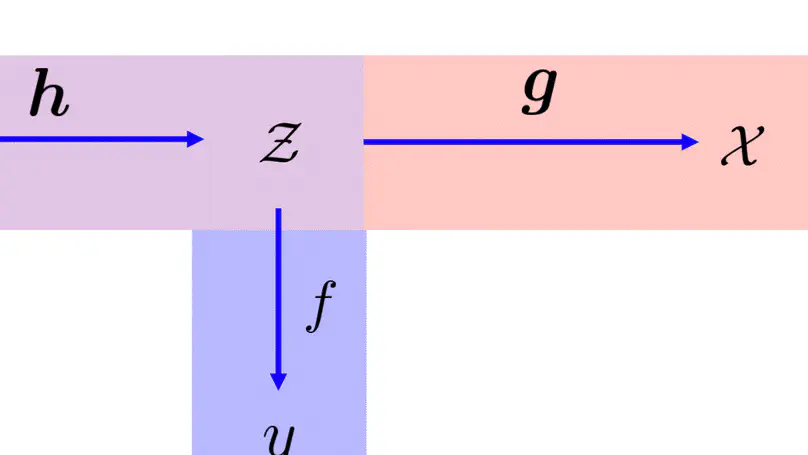

Bayesian optimization is a powerful technique for optimizing expensive black-box functions, but it is typically limited to low-dimensional problems. Here, we extend it to higher dimensions by learning a lower-dimensional embedding in which we optimize the black-box function.
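The basic loop of optimizing in a low-dimensional space and lifting candidates back to the full input space can be sketched as follows. This is an assumption-laden stand-in, not the post's method: it swaps in a fixed random linear embedding (REMBO-style) for the learned one, uses an exact GP with an RBF kernel, and picks each query by minimizing a lower confidence bound over random candidates. `embedded_bo` and the toy objective are hypothetical.

```python
import numpy as np

def rbf(A, B, lengthscale=0.5):
    # Squared-exponential kernel between the rows of A and B
    d2 = ((A[:, None, :] - B[None, :, :]) ** 2).sum(-1)
    return np.exp(-0.5 * d2 / lengthscale ** 2)

def gp_posterior(Z, y, Zs, noise=1e-4):
    # Exact GP posterior mean and variance (unit signal variance)
    L = np.linalg.cholesky(rbf(Z, Z) + noise * np.eye(len(Z)))
    Ks = rbf(Z, Zs)
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y))
    v = np.linalg.solve(L, Ks)
    return Ks.T @ alpha, np.clip(1.0 - (v ** 2).sum(0), 1e-12, None)

def embedded_bo(f, D, d, n_init=5, n_iter=20, beta=2.0, seed=0):
    """Minimize f on [-1, 1]^D by running BO in a d-dim latent space.
    ASSUMPTION: a random linear embedding replaces a learned one."""
    rng = np.random.default_rng(seed)
    A = rng.normal(size=(D, d))

    def lift(z):  # map a latent point into the D-dim box
        return np.clip(A @ z, -1.0, 1.0)

    Z = rng.uniform(-1, 1, size=(n_init, d))
    y = np.array([f(lift(z)) for z in Z])
    for _ in range(n_iter):
        cand = rng.uniform(-1, 1, size=(500, d))
        mu, sd = y.mean(), y.std() + 1e-9  # standardize targets
        mean, var = gp_posterior(Z, (y - mu) / sd, cand)
        z_next = cand[np.argmin(mean - beta * np.sqrt(var))]  # LCB
        Z = np.vstack([Z, z_next])
        y = np.append(y, f(lift(z_next)))
    best = np.argmin(y)
    return lift(Z[best]), y[best], y

# Toy 100-D objective that depends on only two coordinates
f = lambda x: (x[3] - 0.2) ** 2 + (x[17] + 0.5) ** 2
x_best, y_best, history = embedded_bo(f, D=100, d=2)
```

The GP only ever sees 2-D inputs, which is what keeps the surrogate tractable even though `f` takes 100-D inputs.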

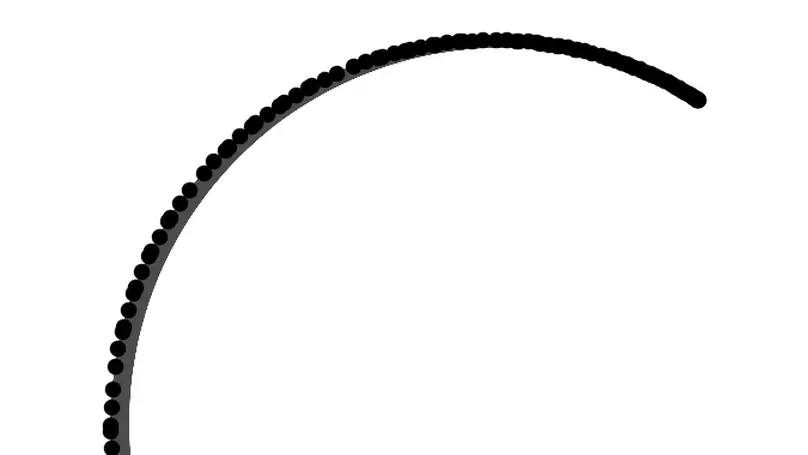

Data is often gathered sequentially in the form of time series: sequences of data points observed at successive time points. Dynamic time warping (DTW) defines a meaningful similarity measure between two time series. Often, the pairs of time series we are interested in are defined on different spaces: for instance, one might want to align a video with a corresponding audio wave, potentially sampled at different frequencies.
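Classic DTW is a dynamic program over alignments. The sketch below is the standard textbook recursion, not the post's method. The `cost` argument compares an element of one series directly with an element of the other. This is exactly what becomes ill-defined when the two series live in different spaces, such as video frames and audio samples.

```python
def dtw(x, y, cost=lambda a, b: abs(a - b)):
    """Dynamic time warping distance between sequences x and y."""
    n, m = len(x), len(y)
    INF = float("inf")
    # D[i][j] = cost of the best alignment of x[:i] with y[:j]
    D = [[INF] * (m + 1) for _ in range(n + 1)]
    D[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            c = cost(x[i - 1], y[j - 1])
            D[i][j] = c + min(D[i - 1][j],      # y[j-1] matched again
                              D[i][j - 1],      # x[i-1] matched again
                              D[i - 1][j - 1])  # advance both series
    return D[n][m]

print(dtw([0, 1, 2], [0, 1, 1, 2]))  # 0.0: warping absorbs the repeated 1
```

The table has (n + 1) × (m + 1) entries, so this runs in O(nm) time, and series of different lengths (for example, different sampling rates) are handled naturally.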

Barycenters summarize populations of measures, but existing methods for computing them do not scale to high dimensions. We propose a scalable algorithm for estimating barycenters in high dimensions by turning the optimization over measures into a more tractable optimization over a space of generative models.
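To show what a barycenter is, here is the one case that is easy. In one dimension, the Wasserstein-2 barycenter's quantile function is the weighted average of the input quantile functions. For empirical measures with equal sample sizes, that reduces to averaging sorted samples. This is only an illustration. No such closed form exists in general for higher dimensions, which is the setting the post addresses.

```python
import numpy as np

def barycenter_1d(samples, weights=None):
    """W2 barycenter of 1-D empirical measures with equal sample counts:
    average the sorted samples (i.e. the empirical quantile functions)."""
    S = np.sort(np.asarray(samples, dtype=float), axis=1)
    if weights is None:
        w = np.full(len(S), 1.0 / len(S))
    else:
        w = np.asarray(weights, dtype=float)
    return w @ S

print(barycenter_1d([[2, 0, 1], [10, 12, 11]]))  # [5. 6. 7.]
```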

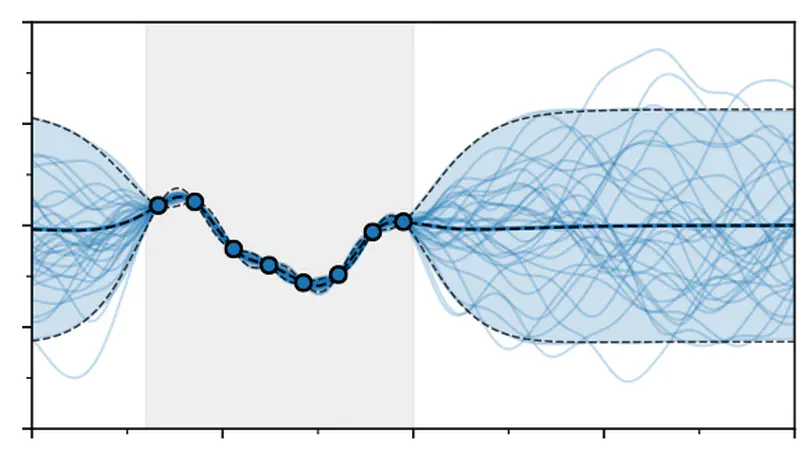

Efficient sampling from Gaussian process posteriors matters in many practical applications. Using Matheron's rule, we decouple the posterior into a prior term and a data-dependent update, which allows us to sample functions from the Gaussian process posterior in linear time.
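Matheron's rule says that if (f, f*) is a joint prior draw at training inputs X and test inputs X*, then f* + K(X*, X)(K(X, X) + σ²I)⁻¹(y − f(X) − ε) is a posterior draw. The sketch below assumes an RBF kernel with unit variance and approximates the prior draw with random Fourier features, so the prior part is approximate while the update uses the exact kernel. After one O(n³) solve against the training data, each test point costs O(n) for the update plus O(M) for its M features, so the cost grows linearly in the number of test locations. `pathwise_samples` is a hypothetical name.

```python
import numpy as np

def rbf(A, B, ls):
    d2 = ((A[:, None, :] - B[None, :, :]) ** 2).sum(-1)
    return np.exp(-0.5 * d2 / ls ** 2)

def pathwise_samples(X, y, Xs, n_samples=3, ls=0.5, noise=1e-4,
                     n_features=2000, seed=0):
    """Posterior GP draws at Xs via Matheron's rule.
    ASSUMPTION: RBF kernel, prior approximated by random Fourier features."""
    rng = np.random.default_rng(seed)
    W = rng.normal(scale=1.0 / ls, size=(X.shape[1], n_features))  # frequencies
    b = rng.uniform(0, 2 * np.pi, size=n_features)                 # phases
    phi = lambda Z: np.sqrt(2.0 / n_features) * np.cos(Z @ W + b)
    w = rng.normal(size=(n_features, n_samples))  # one weight vector per draw
    f_X, f_s = phi(X) @ w, phi(Xs) @ w            # joint prior draw at X and Xs
    eps = rng.normal(scale=np.sqrt(noise), size=f_X.shape)
    # Data-dependent update: one n x n solve shared by all test points
    K = rbf(X, X, ls) + noise * np.eye(len(X))
    v = np.linalg.solve(K, y[:, None] - f_X - eps)
    return f_s + rbf(Xs, X, ls) @ v

X = np.array([[0.0], [1.0], [2.0], [3.0]])
y = np.sin(X[:, 0])
Xs = np.linspace(0, 3, 50)[:, None]
S = pathwise_samples(X, y, Xs)  # shape (50, 3): three posterior function draws
```

Because each draw is a function (features plus kernel update), it can be evaluated at new inputs later without refactorizing a joint covariance over all test points.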